Least Recently Used (LRU) Cache Algorithm

Related Chapter:

LFU Cache

Problem Statement:

Design and implement a data structure for a Least Recently Used (LRU) cache. It should support the following operations: getContent and insertContent.

getContent(key) - Get the value of the key if the key exists in the cache.

insertContent(key, value) - Set the value, or insert it if the key is not already present. When the cache has reached its capacity, it should invalidate the least recently used item before inserting a new item.
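
To make the required behavior concrete, here is a minimal sketch that reproduces it with the JDK's `LinkedHashMap` in access order. This is a different technique from the hand-built structure developed in the solution, shown only to illustrate the eviction semantics. The class name `LruBehaviorDemo` is hypothetical, and the inherited `get` and `put` stand in for `getContent` and `insertContent`.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Illustrative only: the class name is hypothetical, and LinkedHashMap is
// swapped in here just to demonstrate the expected eviction behavior.
class LruBehaviorDemo<K, V> extends LinkedHashMap<K, V> {
    private final int capacity;

    LruBehaviorDemo(int capacity) {
        // Third argument true = keep entries in access order, not insertion order.
        super(16, 0.75f, true);
        this.capacity = capacity;
    }

    // Called by LinkedHashMap after each insertion; returning true drops
    // the eldest (least recently accessed) entry.
    @Override
    protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
        return size() > capacity;
    }
}
```

With a capacity of 2, after `put(1, "a")`, `put(2, "b")`, `get(1)`, and `put(3, "c")`, key 2 is evicted: it is the least recently used entry, because key 1 was read after key 2 was inserted.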

Solution:

The first and most important purpose of any cache is to provide fast and efficient retrieval of data. So cache insert and retrieve operations should be fast, preferably O(1) time. As we learnt, the LRU cache evicts the least recently used item first, so we are required to:

- Track the recently used entries.
- Track the entries which have not been used for a long time.
- Additionally, make the insertion and retrieval operations fast.

As we want fast retrieval, say taking only O(1) time, HashMap is a very efficient data structure. HashMap provides O(1) lookup and insertion on average, so it solves the problem of fast operations. Remember that in the worst case, when many keys collide into the same bucket, the time complexity of HashMap operations can degrade to O(n), where n is the total number of items in the HashMap.

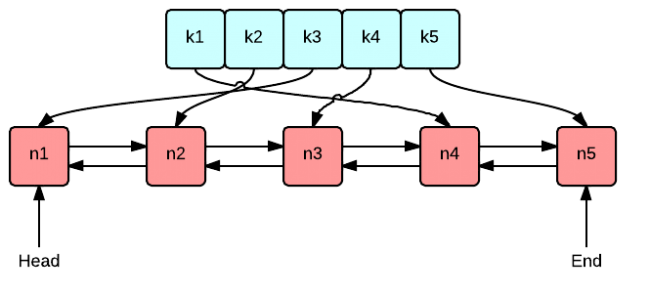

However, HashMap does not provide functionality to track the recently used entries. So we need another data structure which can track the order of use and still provide fast operations. The data structure that fulfills this requirement is the Doubly Linked List: each node holds the addresses of its previous and next nodes, so insertion, deletion, and update take O(1) provided the address of the node is available. So by combining HashMap and Doubly Linked List, we will implement our LRU Cache in Java. The Doubly Linked List will hold the values (each node also stores its key, so that an evicted entry can be removed from the HashMap), and the HashMap will map each key to the address of its node in the list.
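
The O(1) claim for the Doubly Linked List can be illustrated with a minimal sketch. The names `DllNode`, `DllSketch`, `addFirst`, and `unlink` are hypothetical, chosen for illustration, and keys and values are assumed to be `int`. The point is that neither operation walks the list: each one only rewires the neighbors of the node it is given.

```java
// Hypothetical names; a minimal sketch of the node layout and the two
// constant-time operations an LRU cache relies on.
class DllNode {
    int key;
    int value;
    DllNode prev; // address of the previous node, or null
    DllNode next; // address of the next node, or null

    DllNode(int key, int value) {
        this.key = key;
        this.value = value;
    }
}

class DllSketch {
    DllNode head; // first node, or null when the list is empty
    DllNode tail; // last node, or null when the list is empty

    // Insert a node at the front in O(1).
    void addFirst(DllNode node) {
        node.prev = null;
        node.next = head;
        if (head != null) {
            head.prev = node;
        } else {
            tail = node; // list was empty, so the node is also the tail
        }
        head = node;
    }

    // Remove a node in O(1), given its address (no traversal needed).
    void unlink(DllNode node) {
        if (node.prev != null) {
            node.prev.next = node.next;
        } else {
            head = node.next; // node was the head
        }
        if (node.next != null) {
            node.next.prev = node.prev;
        } else {
            tail = node.prev; // node was the tail
        }
        node.prev = null;
        node.next = null;
    }
}
```

A singly linked list would not be enough here, because unlinking a node requires updating its predecessor, and finding the predecessor without a `prev` reference takes a traversal.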

We will follow the below approach in our code :

- We make sure that at all times the head holds the most recently used item and the tail holds the least recently used (LRU) item. So we always remove items from the tail of the doubly linked list and add items at the head. An item that is read or updated is moved to the head.

Code Implementation:

I have put all the implementation logic in the inline comments in the code below.
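
A minimal sketch of the approach described above, not necessarily the author's original listing. It makes several assumptions that the text does not state: keys and values are `int`, `getContent` returns -1 for a missing key, `insertContent` overwrites the value when the key is already present, and two sentinel nodes mark the ends of the list so that no null checks are needed. The helper names `unlink` and `addAfterHead` are hypothetical.

```java
import java.util.HashMap;
import java.util.Map;

// Sketch under stated assumptions: int keys and values, -1 on a miss.
class LRUCache {
    private static final class Node {
        int key;
        int value;
        Node prev;
        Node next;

        Node(int key, int value) {
            this.key = key;
            this.value = value;
        }
    }

    private final int capacity;
    // Maps each key to the address of its node in the list.
    private final Map<Integer, Node> index = new HashMap<>();
    // Sentinels: head.next is the most recently used item,
    // tail.prev is the least recently used item.
    private final Node head = new Node(0, 0);
    private final Node tail = new Node(0, 0);

    LRUCache(int capacity) {
        if (capacity <= 0) {
            throw new IllegalArgumentException("capacity must be positive");
        }
        this.capacity = capacity;
        head.next = tail;
        tail.prev = head;
    }

    // Returns the value for the key, or -1 if absent (assumed convention).
    int getContent(int key) {
        Node node = index.get(key);
        if (node == null) {
            return -1;
        }
        // A read counts as a use: move the node to the head.
        unlink(node);
        addAfterHead(node);
        return node.value;
    }

    void insertContent(int key, int value) {
        Node node = index.get(key);
        if (node != null) {
            // Key already present: update the value and mark it as recently used.
            node.value = value;
            unlink(node);
            addAfterHead(node);
            return;
        }
        if (index.size() == capacity) {
            // Cache is full: evict the least recently used item at the tail.
            Node lru = tail.prev;
            unlink(lru);
            index.remove(lru.key); // the node stores its key for this step
        }
        Node fresh = new Node(key, value);
        index.put(key, fresh);
        addAfterHead(fresh);
    }

    // Detach a node from the list in O(1).
    private void unlink(Node node) {
        node.prev.next = node.next;
        node.next.prev = node.prev;
    }

    // Insert a node right after the head sentinel in O(1).
    private void addAfterHead(Node node) {
        node.next = head.next;
        node.prev = head;
        head.next.prev = node;
        head.next = node;
    }
}
```

For example, with `new LRUCache(2)`, calling `insertContent(1, 10)`, `insertContent(2, 20)`, `getContent(1)`, and then `insertContent(3, 30)` evicts key 2, so a later `getContent(2)` returns -1 while `getContent(1)` still returns 10.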
